Introduction

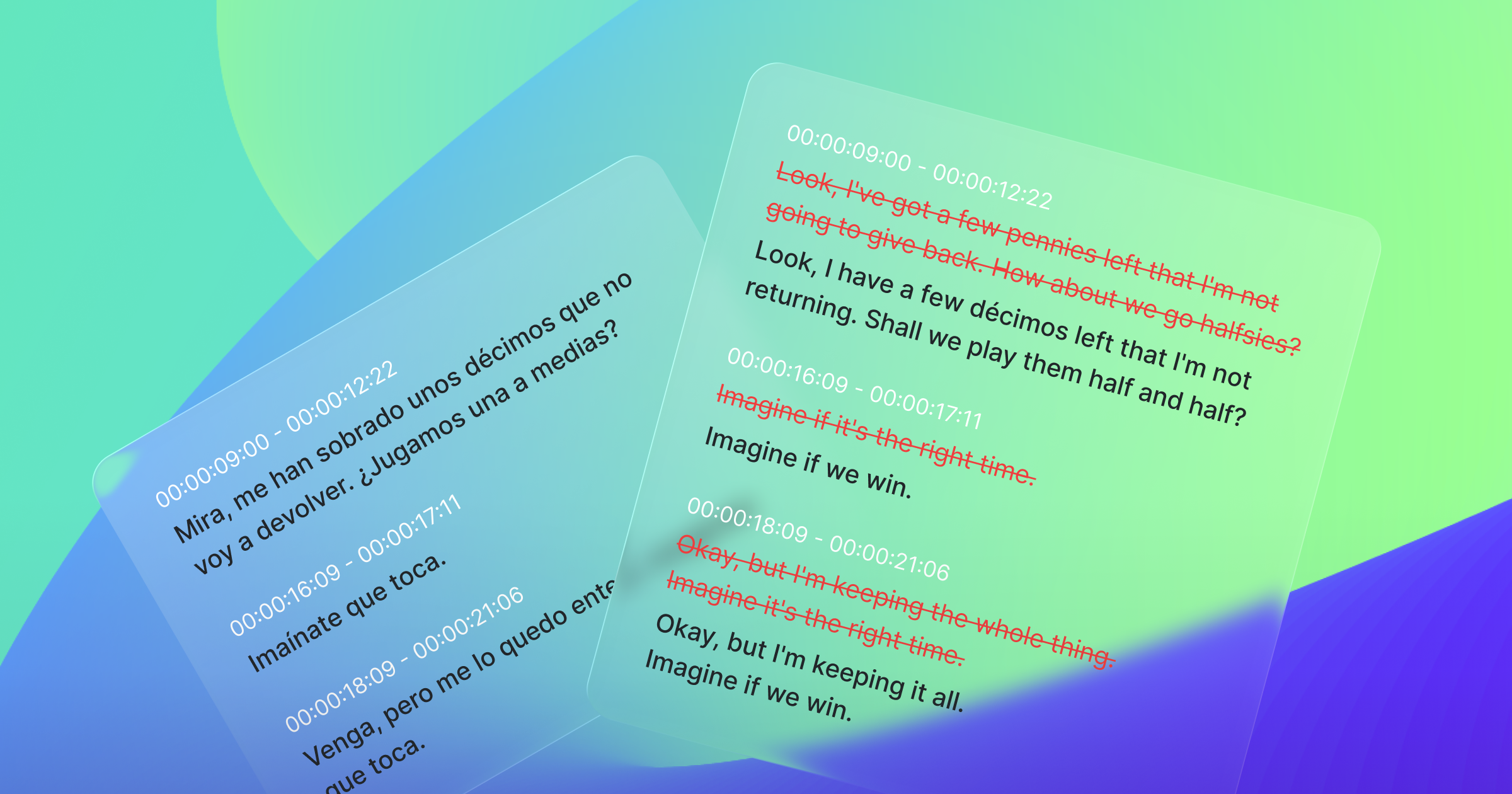

Neural machine translation (NMT) keeps improving and helps translators work faster and more accurately. New functionality continues to make translation easier, and one example is “lexically constrained translation”, which constrains the translation of specific source words to specific target words. For example, suppose we translate “Mr. White” into French and get “Monsieur Blanc”, where “blanc” is the literal translation of the color “white”. This is certainly not what we wanted; we want “Monsieur White” instead. In this case, we can use lexically constrained NMT by providing “White” as the source constraint word and “White” as the target constraint word.

Many ideas have been proposed for incorporating constraints into translation, too many to review in a single blog post. In this post, we briefly review approaches from two families: constrained decoding methods and methods that learn from augmented datasets.

Constrained decoding

Constrained decoding, as the name suggests, generates the constraints during the decoding step. This kind of approach is called “hard lexically constrained NMT” because the constraints are forced to appear in the translated sentences. Constrained decoding methods usually do not require training additional models, since existing models can be used with constrained decoding as they are.

Grid beam search (GBS)[1] is one of the earliest approaches to constrained decoding. In GBS, the decoding steps and the number of generated constraint tokens (i.e., constraint coverage) form a grid, and one fixed-size beam is assigned to each combination of decoding step and constraint coverage. For each hypothesis in a beam, three kinds of search candidates are possible for the next step. The first is to generate the best token according to the model’s output probabilities. The second is to start generating a constraint that has not been started yet. The third is to continue a constraint whose generation is already in progress. This approach guarantees that the constraints appear in the translated sentence, but its major drawback is decoding speed, because the search space grows in proportion to the number of constraint tokens.
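The three kinds of candidates can be illustrated with a small sketch. This is not the implementation from [1]: the `Hyp` class, the `expand` function, and the rule that a hypothesis in the middle of a constraint may only continue it are simplifying assumptions made for illustration, and scoring and beam pruning are left out entirely.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Hyp:
    """Hypothetical hypothesis record (illustration only)."""
    tokens: Tuple[str, ...]
    coverage: int = 0                        # constraint tokens generated so far
    used: Tuple[int, ...] = ()               # constraints already started
    open_: Optional[Tuple[int, int]] = None  # (constraint index, next position)


def expand(hyp: Hyp, best_token: str,
           constraints: List[Tuple[str, ...]]) -> List[Tuple[str, Hyp]]:
    """Return the successors of one hypothesis as (kind, Hyp) pairs."""
    if hyp.open_ is not None:
        # "continue": emit the next token of the constraint in progress.
        ci, pos = hyp.open_
        tok = constraints[ci][pos]
        nxt = (ci, pos + 1) if pos + 1 < len(constraints[ci]) else None
        return [("continue",
                 Hyp(hyp.tokens + (tok,), hyp.coverage + 1, hyp.used, nxt))]

    # "generate": take the model's best token; coverage is unchanged.
    out = [("generate",
            Hyp(hyp.tokens + (best_token,), hyp.coverage, hyp.used, None))]

    # "start": begin any constraint that has not been started yet.
    for ci, constraint in enumerate(constraints):
        if ci not in hyp.used:
            nxt = (ci, 1) if len(constraint) > 1 else None
            out.append(("start",
                        Hyp(hyp.tokens + (constraint[0],), hyp.coverage + 1,
                            hyp.used + (ci,), nxt)))
    return out


constraints = [("Monsieur", "White"), ("Paris",)]
for kind, nxt in expand(Hyp(tokens=("Bonjour",)), "le", constraints):
    print(kind, nxt.tokens, "coverage =", nxt.coverage)
```

Each successor would be placed in the beam for the next decoding step and its own `coverage` value, which is why the number of beams grows with the number of constraint tokens.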

Dynamic beam allocation (DBA)[2] was proposed to mitigate the speed issue. Unlike GBS, which keeps a separate beam for every constraint-coverage level, DBA uses a single beam of fixed size and an efficient algorithm to select the best hypotheses. Specifically, let k be the beam size. At each step, DBA collects candidate tokens from three sources: the k best tokens according to the model’s output probabilities; tokens that satisfy constraints, either by starting or by continuing a constraint word; and the best candidate token for each hypothesis. It then creates C+1 bins, each of size k/(C+1), where C is the number of constraints, and allocates the best candidates to the bins according to the number of constraints generated. In this way, DBA can efficiently track hypotheses with different numbers of generated constraints while keeping the beam size constant.
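The bin allocation can be sketched as follows. This is a simplified illustration, not the algorithm from [2]: the `allocate` function is hypothetical, candidates are reduced to `(score, met)` pairs, and the handling of remainder slots and unused slots is an assumption made to keep the example short.

```python
from typing import List, Tuple

Candidate = Tuple[float, int]  # (log-probability score, constraints met)


def allocate(candidates: List[Candidate], k: int,
             num_constraints: int) -> List[Candidate]:
    """Pick k candidates, reserving room for every constraint-coverage level."""
    n_bins = num_constraints + 1
    quota = [k // n_bins] * n_bins
    quota[-1] += k % n_bins  # assumption: remainder goes to the last bin

    # Group candidates by number of constraints met, best score first.
    by_bin = {b: [] for b in range(n_bins)}
    for cand in sorted(candidates, key=lambda c: c[0], reverse=True):
        by_bin[cand[1]].append(cand)

    chosen, leftover = [], []
    for b in range(n_bins):
        chosen += by_bin[b][:quota[b]]
        leftover += by_bin[b][quota[b]:]

    # Assumption: slots a bin could not fill go to the best remaining candidates.
    leftover.sort(key=lambda c: c[0], reverse=True)
    chosen += leftover[:k - len(chosen)]
    return chosen


candidates = [(-0.1, 0), (-0.2, 0), (-0.3, 0), (-0.4, 0), (-2.0, 1)]
print(allocate(candidates, k=4, num_constraints=1))
```

In this toy input, a plain top-4 selection would keep only candidates that have met no constraint. With bins, the low-scoring candidate that has already met the constraint survives, so the beam does not lose track of it.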

Vectorized dynamic beam allocation (VDBA)[3] refines DBA to make it faster and more efficient. The beam allocation in DBA is not suitable for batch processing. VDBA makes batching possible by introducing a matrix that records the sentence number, score, and number of unmet constraints for each hypothesis; hypotheses with the same sentence number and the same number of unmet constraints are grouped together. Within each group, hypotheses are sorted by score, and the best hypotheses from each group are chosen as candidates. This algorithm can be implemented efficiently with a multi-key sort. Another drawback of DBA was that it could track only one constraint word at a time. If a token did not match the currently tracked constraint word but did match another one, DBA could not recognize it and simply reset the tracking progress. VDBA uses a trie data structure to keep track of multiple constraint words simultaneously, which solves this problem. It decodes about 5 times faster than DBA when the batch size is 20.
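The grouping and multi-key sort can be sketched in plain Python. This is only an illustration of the idea under simplifying assumptions: the `select` function and its row layout `(sentence, unmet, score)` are hypothetical, and a real implementation in the spirit of [3] would operate on tensors for the whole batch rather than on Python lists.

```python
from itertools import groupby
from typing import Dict, List, Tuple

Row = Tuple[int, int, float]  # (sentence number, unmet constraints, score)


def select(rows: List[Row], k: int) -> Dict[int, List[Row]]:
    """Keep up to k hypotheses per sentence, taking the best of each group first."""
    # First multi-key sort: sentence, then unmet constraints, then best score.
    rows = sorted(rows, key=lambda r: (r[0], r[1], -r[2]))

    # Rank every hypothesis inside its (sentence, unmet) group.
    ranked = []
    for _, group in groupby(rows, key=lambda r: (r[0], r[1])):
        for rank, row in enumerate(group):
            ranked.append((row, rank))

    # Second multi-key sort: sentence, then rank, then unmet constraints,
    # so the top hypothesis of every group comes before any runner-up.
    ranked.sort(key=lambda x: (x[0][0], x[1], x[0][1]))

    beams: Dict[int, List[Row]] = {}
    for row, _ in ranked:
        beam = beams.setdefault(row[0], [])
        if len(beam) < k:
            beam.append(row)
    return beams


rows = [(0, 0, -3.0), (0, 1, -1.0), (0, 1, -1.5), (0, 2, -0.5),
        (1, 1, -0.2), (1, 0, -4.0)]
print(select(rows, k=3))
```

Because every step is a sort or a grouped ranking over all rows at once, the same selection applies to all sentences in a batch in one pass.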

Learning from augmented datasets

In this approach, the source data is augmented with target constraint data. Because the dataset changes, new models must be trained on the augmented data to support lexically constrained NMT. In contrast to “hard lexically constrained NMT”, data augmentation methods do not force the constraints into the translated sentences but let the model learn to decide whether the constraints should appear in the target sentences; they are therefore called “soft lexically constrained NMT”. These methods usually do not increase inference time, since the inference step is the same as in vanilla NMT except that the target constraints are added to the source sentences.

One of the first ideas for data augmentation was to augment the source data with target constraints, either by replacing the source constraints with the target constraints or by appending the target constraints to the source constraints[4]. Dinu et al. used dictionaries to extract aligned word pairs from source-target sentence pairs and used the aligned words to augment the source sentence. They used approximate matching to allow some variation between the words in the datasets and the words in the dictionaries. In addition to replacing or appending, they added an extra input stream to the augmented source sentences to indicate “code-switching”, i.e., whether a word belongs to the source constraints, belongs to the target constraints, or is unrelated to the constraints. Although this approach is “soft”, the experimental results showed that it can achieve constraint coverage higher than 90%. They also showed that, with approximate matching, the model can learn to inflect the target constraints according to the context.
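The replace and append variants, together with the extra stream, can be sketched as below. This is a toy illustration rather than the pipeline from [4]: the `augment` function is hypothetical, matching is exact and single-token instead of approximate, and the stream values 0, 1, and 2 (unrelated word, source constraint, target constraint) are labels chosen for this example.

```python
from typing import Dict, List, Tuple


def augment(source_tokens: List[str], glossary: Dict[str, str],
            mode: str = "append") -> Tuple[List[str], List[int]]:
    """Return the augmented source tokens and a parallel code-switching stream."""
    if mode not in ("append", "replace"):
        raise ValueError("mode must be 'append' or 'replace'")

    tokens: List[str] = []
    stream: List[int] = []
    for tok in source_tokens:
        target = glossary.get(tok)
        if target is None:
            tokens.append(tok)       # ordinary source word
            stream.append(0)
        elif mode == "append":
            tokens += [tok, target]  # keep the source term, add the target term
            stream += [1, 2]
        else:
            tokens.append(target)    # swap the source term for the target term
            stream.append(2)
    return tokens, stream


source = ["All", "alternates", "must", "be", "approved"]
glossary = {"alternates": "Alternativen"}
print(augment(source, glossary, mode="append"))
print(augment(source, glossary, mode="replace"))
```

The stream has the same length as the augmented token sequence, so it can be fed to the model alongside the tokens as an additional input feature.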

LeCA[5], on the other hand, proposed sampling constraints from the target sentences and appending them to the end of each source sentence. This sampling method is far simpler than using alignments or external dictionaries. LeCA also introduces segment embeddings and new positional embeddings to differentiate the constraints from the source sentence. The experimental results show that LeCA also achieves high constraint coverage, sometimes over 99%.
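A minimal sketch of this style of augmentation is shown below. It is not the LeCA implementation: the function names, the `<sep>` token, the sampling limits, and the segment numbering are assumptions for illustration, sampled spans may overlap, and the positional embeddings are not modeled at all.

```python
import random
from typing import List, Tuple


def sample_constraints(target_tokens: List[str], max_constraints: int,
                       max_len: int, rng: random.Random) -> List[List[str]]:
    """Sample up to max_constraints contiguous spans from the target sentence."""
    if not target_tokens:
        return []
    constraints = []
    for _ in range(rng.randint(0, max_constraints)):
        length = rng.randint(1, min(max_len, len(target_tokens)))
        start = rng.randint(0, len(target_tokens) - length)
        constraints.append(target_tokens[start:start + length])
    return constraints


def build_input(source_tokens: List[str], constraints: List[List[str]],
                sep: str = "<sep>") -> Tuple[List[str], List[int]]:
    """Append constraints to the source and mark each part with a segment id."""
    tokens = list(source_tokens)
    segments = [0] * len(tokens)  # segment 0 is the source sentence
    for i, constraint in enumerate(constraints, start=1):
        tokens += [sep] + list(constraint)
        segments += [i] * (len(constraint) + 1)  # separator shares the id
    return tokens, segments


rng = random.Random(0)
target = ["Monsieur", "White", "est", "ici"]
sampled = sample_constraints(target, max_constraints=2, max_len=2, rng=rng)
print(build_input(["Mr.", "White", "is", "here"], sampled))
```

The segment ids are what a segment embedding layer would consume, letting the model tell constraint tokens apart from ordinary source tokens.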

While Dinu et al. showed that data augmentation methods might be able to handle inflection, Jon et al.[6] ran experiments to verify that a model can really handle the inflection of constraints, using the English-Czech pair, where Czech is morphologically rich and heavily inflected. They kept the model architecture and data structure similar to those of LeCA[5], except that they added a step to lemmatize the target constraints, that is, to reduce each word to its base form. For example, the lemma of “gone” is “go”, and the lemma of “went” is also “go”. Specifically, after sampling the constraints, they lemmatized them using dictionaries and then augmented the source data with the lemmatized constraints. Their results showed that models trained with lemmatized constraints handle the inflection of constraints well, with constraint coverage higher than 90%, while preserving translation quality.
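The lemmatization step can be sketched with a toy lookup table. This is an illustration only: the `TOY_LEMMAS` table, the function names, and the `<sep>` token are assumptions, and English stands in for the target language here because the lemma example above is in English, whereas [6] works with Czech targets and dictionary-based lemmatization.

```python
from typing import Dict, List

# Toy lemma table for illustration; a real system would use a full dictionary.
TOY_LEMMAS: Dict[str, str] = {"gone": "go", "went": "go"}


def lemmatize(tokens: List[str],
              lemmas: Dict[str, str] = TOY_LEMMAS) -> List[str]:
    """Map each token to its lemma, leaving unknown tokens unchanged."""
    return [lemmas.get(tok.lower(), tok) for tok in tokens]


def make_training_source(source_tokens: List[str],
                         target_constraints: List[List[str]],
                         sep: str = "<sep>") -> List[str]:
    """Append lemmatized target constraints to the source sentence."""
    tokens = list(source_tokens)
    for constraint in target_constraints:
        tokens += [sep] + lemmatize(constraint)
    return tokens


# The reference translation keeps the inflected form ("went"), so the model
# has to learn to inflect the lemma "go" that it sees on the source side.
print(make_training_source(["Il", "est", "parti"], [["went"]]))
```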

Conclusion

Having reviewed some lexically constrained NMT methods, you might wonder whether XL8 also provides this feature. In XL8, you can enable lexically constrained NMT through the “glossary” feature. We use various techniques, including the ones described above, to provide the most fluent translations that satisfy the constraints. To find out how to use the “glossary” feature, please refer to this blog post.

References

[1] Chris Hokamp and Qun Liu. 2017. Lexically constrained decoding for sequence generation using grid beam search. In Proceedings of ACL, pages 1535–1546, Vancouver, Canada.

[2] Matt Post and David Vilar. 2018. Fast lexically constrained decoding with dynamic beam allocation for neural machine translation. In Proceedings of NAACL 2018, pages 1314–1324, New Orleans, Louisiana. Association for Computational Linguistics.

[3] J. Edward Hu, Huda Khayrallah, Ryan Culkin, Patrick Xia, Tongfei Chen, Matt Post, and Benjamin Van Durme. 2019. Improved lexically constrained decoding for translation and monolingual rewriting. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 839–850, Minneapolis, Minnesota. Association for Computational Linguistics.

[4] Georgiana Dinu, Prashant Mathur, Marcello Federico, and Yaser Al-Onaizan. 2019. Training Neural Machine Translation to Apply Terminology Constraints. In Proceedings of the 57th Annual Meeting of the Association for Computational Linguistics, pages 3063–3068, Florence, Italy. Association for Computational Linguistics.

[5] Guanhua Chen, Yun Chen, Yong Wang, and Victor O.K. Li. 2020. Lexical-constraint-aware neural machine translation via data augmentation. In Proceedings of the Twenty-Ninth International Joint Conference on Artificial Intelligence, IJCAI-20, pages 3587–3593. International Joint Conferences on Artificial Intelligence Organization. Main track.

[6] Josef Jon, João Paulo Aires, Dusan Varis, and Ondřej Bojar. 2021. End-to-End Lexically Constrained Machine Translation for Morphologically Rich Languages. In Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics and the 11th International Joint Conference on Natural Language Processing (Volume 1: Long Papers), pages 4019–4033, Online. A

Written By Hankyu Cho, Research Engineer at XL8 Inc.