After a few years of interruption due to the Covid pandemic, it’s refreshing to be attending and enjoying in-person conferences and live events again. One of the things I appreciate about being back in person is the international feel of meeting people from all around the world. However, this can come with language barriers, which lead to lower engagement and a lack of inclusivity or participation at an event. AI is playing a transformative role here by simplifying communications and workflows in the live events space, the broadcast market, and the media industry in general. AI technology can automate and enhance many aspects of localization, from language translation to content moderation.

Bridging Communication Gaps

Hiring professional interpreters and translators to interpret live while speakers present certainly helps turn attendees into active participants, but it often comes with a cost barrier. Interpreters may also struggle with specialized content or unfamiliar terminology, or simply experience fatigue during a long event because of the intense focus and cognitive effort required. Using AI to translate and interpret live events can be a powerful application of natural language processing and machine learning technologies. Human interpreters and AI translation both bridge communication gaps effectively, and each has its own strengths and limitations. AI delivers consistent performance and accuracy, supports a wide range of languages, accents, and dialects, and is rapidly advancing toward more sophisticated approaches to translation.

AI Language Processing Models

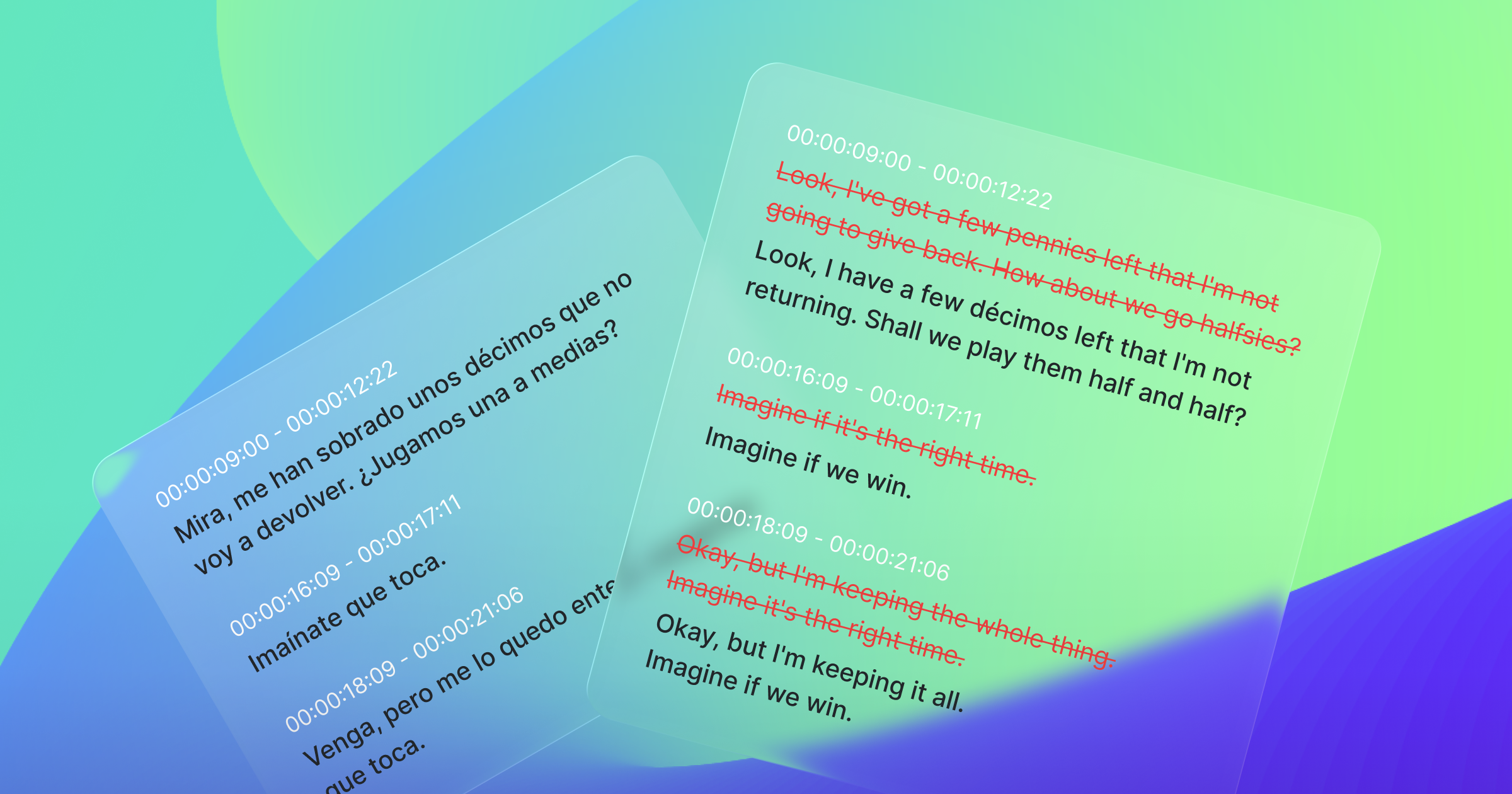

Simultaneous interpretation is particularly useful for live events where multiple languages are spoken. AI-powered tools can listen to a speaker, transcribe the speech, translate it into another language, and offer subtitles or captions, all in real time. Combined with language detection, which determines what language is being spoken, AI can also switch languages automatically for the audience.
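To make the listen, transcribe, translate, and caption flow concrete, here is a minimal Python sketch of how the stages might be chained for one chunk of audio. It is an illustration only, not XL8's implementation: `caption_chunk`, `Caption`, and the three `fake_*` stage functions are hypothetical names, and the stand-ins simply treat the audio bytes as text so the example runs without a speech or translation engine.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical stage signatures. A real system would plug in an ASR engine,
# a language identifier, and a machine translation model here.
Transcriber = Callable[[bytes], str]
Detector = Callable[[str], str]
Translator = Callable[[str, str, str], str]  # (text, source_lang, target_lang)


@dataclass
class Caption:
    source_lang: str
    target_lang: str
    text: str


def caption_chunk(
    audio: bytes,
    transcribe: Transcriber,
    detect: Detector,
    translate: Translator,
    target_langs: List[str],
) -> Dict[str, Caption]:
    """Run one audio chunk through transcribe -> detect -> translate."""
    transcript = transcribe(audio)
    source_lang = detect(transcript)
    captions: Dict[str, Caption] = {}
    for target in target_langs:
        # No translation needed when the viewer's language matches the speaker's.
        if target == source_lang:
            text = transcript
        else:
            text = translate(transcript, source_lang, target)
        captions[target] = Caption(source_lang, target, text)
    return captions


# Toy stand-ins (assumptions) so the sketch runs end to end.
def fake_transcribe(audio: bytes) -> str:
    return audio.decode("utf-8")  # pretend the audio is already text


def fake_detect(text: str) -> str:
    # Treat any Hangul syllable as a sign of Korean, otherwise assume English.
    return "ko" if any("\uac00" <= ch <= "\ud7a3" for ch in text) else "en"


def fake_translate(text: str, source: str, target: str) -> str:
    return f"[{source}->{target}] {text}"
```

Because the source language is detected per chunk, the same loop would handle a speaker who switches languages mid-session: each audience member keeps receiving captions in their chosen target language.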

As Natural Language Processing (NLP) models grow in size, they are better able to understand cultural expressions, idioms, and even tone, helping to create more accurate translations and subtitles. Live subtitles or captions are generated in real time, making the content accessible to a larger global audience and suitable for live event broadcasts. With proper training and calibration, AI can also identify and translate event-specific terminology, which reduces misinterpretation and greatly improves the accuracy of live event translation.
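One simple way to picture event-specific terminology handling is a glossary applied around the translation step. The Python sketch below is an assumption-laden illustration rather than a description of how any particular product works: `translate_with_glossary` is a hypothetical helper, and it assumes the translation engine passes placeholder tokens such as `__TERM0__` through unchanged, which real engines do not always guarantee.

```python
import re
from typing import Callable, Dict


def translate_with_glossary(
    text: str,
    glossary: Dict[str, str],
    translate: Callable[[str], str],
) -> str:
    """Protect glossary terms with placeholders, translate, then restore them."""
    protected = text
    restore: Dict[str, str] = {}
    # Longest terms first so "carbon sink" is matched before "carbon".
    for i, term in enumerate(sorted(glossary, key=len, reverse=True)):
        token = f"__TERM{i}__"
        pattern = re.compile(rf"\b{re.escape(term)}\b", re.IGNORECASE)
        protected, count = pattern.subn(token, protected)
        if count:
            restore[token] = glossary[term]
    translated = translate(protected)
    # Swap each placeholder for the approved target-language term.
    for token, target_term in restore.items():
        translated = translated.replace(token, target_term)
    return translated


# Toy translator (assumption): replaces two words so the sketch is runnable.
def fake_translate(text: str) -> str:
    return text.replace("The", "El").replace("absorbs", "absorbe")
```

An event organizer could supply the glossary ahead of time, for example speaker names, product names, or field-specific terms, so that those items are rendered consistently across every caption.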

Automatic Speech Recognition (ASR) can be used for the live transcription of multilingual presentations, speeches, and even conversations, making it especially useful for real-time translation and interpretation.

AI Application in Live Broadcast Content Moderation

Automated content moderation can quickly and efficiently address issues such as profanity or harmful content. This can be an expensive and tedious task for a human moderator, so automated and machine-learning techniques can offer a more efficient and cost-effective approach. Beyond the workflow advantages, moderation helps create a more integrated content experience for the audience.
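As a rough illustration of automated moderation on live captions, the Python sketch below masks terms from a blocklist before a caption is displayed and flags the caption for review. A blocklist is a deliberately simple stand-in for the machine-learning techniques mentioned above, and `mask_blocked_terms` is a hypothetical helper name, not part of any specific product.

```python
import re
from typing import Iterable, Tuple


def mask_blocked_terms(caption: str, blocked: Iterable[str]) -> Tuple[str, bool]:
    """Return the caption with blocked terms masked, plus a flag for review."""
    # Deduplicate, ignore empty entries, and try longer terms first.
    terms = sorted({t.lower() for t in blocked if t}, key=len, reverse=True)
    if not terms:
        return caption, False
    # Whole-word, case-insensitive match so "darn" does not hit "Darnell".
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )
    masked, hits = pattern.subn(lambda m: "*" * len(m.group(0)), caption)
    return masked, hits > 0
```

In a live setting, the returned flag could be used to route a caption to a human moderator while the masked text is shown to the audience without delay.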

At XL8, we’ve been working in the live event subtitling market alongside many online event management platforms for some years. By providing localized content with automated AI translation, interpretation, and transcription, our localization tools help break down language barriers and make real-time communications more accessible. Now that we’re back to in-person live events and live broadcasts, we’re finding an opportunity to use our knowledge and technology to build best practices for multilingual in-person events that will benefit from these types of automated localization services.

We recently worked with event managers of the Nature Forum, where XL8’s EventCAT provided translated subtitles to event participants without needing human interpreters. Key takeaways for our client were the cost savings, the impressive accuracy that our AI provided, and the obvious enthusiasm from event participants. This event showed how the technology can make an event more accessible and inclusive, ultimately enhancing collaboration and understanding. Another recent use case powered by XL8’s EventCAT was the much-anticipated multicultural event at The Leeum, also known as the Samsung Museum of Art. This event featured real-time translation of exhibit presentations into eight languages, allowing visitors to follow the presentations in their preferred language on their mobile phones.

Part of this success was achieved through a partnership with OnOffMix, one of the largest online event platforms in Korea, which aims to connect the online and offline worlds. Through the event platform, XL8’s translation technology is now more widely available to audiences and users with a variety of localization needs at international conferences.

AI-augmented localization is enabling companies to adapt and thrive in new avenues of global content. It’s empowering localization and event production teams with automated, advanced tools that streamline workflows. Technology has historically had a democratizing effect on our lives. AI is once again advancing our lives by helping us make better content, making content more accessible, and allowing us to talk about content without a language barrier.